Are bad captions better than no captions?

AI-based captioning software is becoming more prevalent thanks to pandemic WFH. But do the people who need captions most find them helpful?

In the first part of this article, I explored whether bad image descriptions were better than no image descriptions. After consulting with many blind users, I didn’t find a single one who said they would rather have bad image descriptions.

Now I’m applying the same question in the context of captioning — are bad captions better than no captions at all?

I don’t have to go far to find real-life experience. My daughter has moderately severe bilateral congenital hearing loss. I have an acquired autoimmune hearing loss and a wicked case of tinnitus from decades of NSAID use because of my arthritis. Because I worked as an advocate for people with hearing loss for almost a decade, many of my friends (or their children) have hearing loss.

There are two forms of captioning:

Live captioning, otherwise known as CART (Communication Access Realtime Translation). This involves someone live interpreting and typing the audio stream, using a court reporting-like interface to keep up in real time with what is being spoken.

Automatic captions, usually AI-based, where software interprets the speech and outputs text alongside the audio stream.

For someone like me, captions help. They aren’t critically essential, but I know that after a day of 7 hours of Zoom calls without captions, I will probably have a headache and go to bed early. I am also guaranteed to have missed at least one point per meeting. What I miss ranges from subtle to really important. Captions also benefit people who are more visual learners than auditory learners (I am one of those people, too).

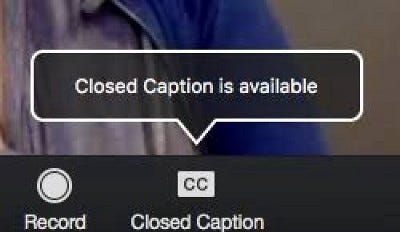

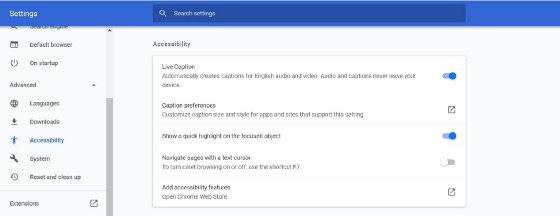

Chrome settings page where you can turn on live captioning and thicker box around the focused object. I use both. They are extraordinarily helpful.

For someone with the hearing loss level my daughter has, captions are essential. It’s challenging for her to follow one of her online classes without them. Fortunately, there is a new auto captioning feature in Chrome that you can access by visiting Settings->Advanced->Accessibility and toggling the “Live Caption” switch. That takes something that wasn’t captioned originally and creates captions for it inside the Chrome window. With this turned on, I am now getting captions for videos that I didn’t even know were playing sound previously, which is kind of amusing.

I am of two minds on this approach. Part of me is thrilled it is available; part of me is sad because it probably alleviates some of the pressure (even though it shouldn’t) on non-captioners like Clubhouse to caption their stuff.

Band-aids don’t eliminate the need for the wound to heal, folx.

Remember that.

Meryl Evans captioning video showing how bad auto-captioning solutions can be.

Even though auto-captioning technology has improved by leaps and bounds over the last year, it is still not perfect. Meryl K. Evans will tell you all about that. She is the queen of captions. One quick look at her video demonstrates several things that can go wrong with autogenerated captions, frequently making them wholly ineffective.

Auto-craptions. These occur when the written words fail to be an accurate reflection of what was stated. The chance of auto-craptions increases when speakers have non-American accents or technical terms/abbreviations are used that are not in the captioning dictionary.

Lack of attribution. When there are multiple speakers in a video, auto-captions rarely tell who is speaking.

Bad punctuation. Automatic captioning rarely comes with accurate punctuation.

Bad multimedia synchronization. For captions to be effective, the captions need to be tightly synchronized to the words being heard in the soundtrack.

Lack of contrast and small or difficult-to-read fonts. Closed captions are vastly preferred by people with hearing loss over burned-in subtitles (aka “open captions”). Closed captions allow the user to control the size, color, and font of the captions to work best with any other disabilities they may experience.

Missing non-verbal sounds. A dog barking. A doorbell ringing. Music. These things add depth to acquiring an equal understanding of what is going on in the video. All you have to do is watch the new Justice League director’s cut with captions turned on to realize how important musical descriptions are to understanding what is going on if you can’t hear (TL;DR: every single piece of different Amazonian music had the same captioned description, “ancient lamentation”).
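To make a few of the items above concrete, here is a minimal sketch of a caption file that carries speaker attribution, timing, and a non-verbal sound. It writes WebVTT, one common closed-caption format. The `Cue` class, the function names, and the sample cues are all hypothetical and only for illustration; this is not how any particular captioning tool works.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Cue:
    start: float                   # seconds from the start of the video
    end: float                     # seconds from the start of the video
    text: str                      # spoken words, or a sound description
    speaker: Optional[str] = None  # None marks a non-verbal sound


def timestamp(seconds: float) -> str:
    """Format seconds as a WebVTT timestamp (hh:mm:ss.mmm)."""
    ms = round(seconds * 1000)
    hours, ms = divmod(ms, 3_600_000)
    minutes, ms = divmod(ms, 60_000)
    secs, ms = divmod(ms, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def to_webvtt(cues: List[Cue]) -> str:
    """Build WebVTT text. Note: this sketch does not escape cue text."""
    lines = ["WEBVTT", ""]
    for cue in cues:
        lines.append(f"{timestamp(cue.start)} --> {timestamp(cue.end)}")
        if cue.speaker:
            # A WebVTT voice span tells the viewer who is speaking
            lines.append(f"<v {cue.speaker}>{cue.text}")
        else:
            # Non-verbal sounds are conventionally shown in brackets
            lines.append(f"[{cue.text}]")
        lines.append("")
    return "\n".join(lines)


# Made-up sample cues
sample = [
    Cue(0.0, 2.5, "Who is at the door?", speaker="Speaker 1"),
    Cue(2.5, 4.0, "doorbell rings"),
]
print(to_webvtt(sample))
```

Because the captions live in a separate text file rather than being burned into the video, the player can let each viewer choose the size, color, and font.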

How important is captioning accuracy?

Captioning accuracy is the great litmus test for whether people with hearing loss achieve equal access and effective communication.

The US government has set a level of 98.6% accuracy for captioning legislative events.

Other sources have cited a range of 90–92% accuracy for equal access.

Why not 100%? People with hearing loss are good at filling in the blanks for the words that are miscaptioned.

Captioning accuracy is really, really important. But don’t forget the other things Meryl highlights (punctuation, speakers, non-verbal sounds, synchronization). Those are important too, and 90%+ word accuracy without them is still not an awesome result for someone who needs captions.
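The percentages above don’t come with a formula, so here is one hedged way to put a number on word accuracy: compare the captions with a trusted transcript, count word-level insertions, deletions, and substitutions, and subtract that error rate from 100%. This is a sketch of a common approach, not necessarily how the figures quoted above were measured. The function names and the sample sentences are made up for illustration.

```python
import re
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into words, ignoring punctuation."""
    return re.findall(r"[a-z0-9']+", text.lower())


def word_edit_distance(ref: List[str], hyp: List[str]) -> int:
    """Levenshtein distance counted in whole words, not characters."""
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # word missing from the captions
                curr[j - 1] + 1,     # extra word in the captions
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1]


def word_accuracy(reference: str, caption: str) -> float:
    """Return 1 minus the word error rate, floored at 0."""
    ref = tokenize(reference)
    hyp = tokenize(caption)
    if not ref:
        raise ValueError("reference transcript is empty")
    errors = word_edit_distance(ref, hyp)
    return max(0.0, 1.0 - errors / len(ref))


# Made-up sentence: one substitution plus one extra word against 5 words
score = word_accuracy(
    "The cholesterol video was captioned",
    "The cluster all video was captioned",
)
print(f"{score:.0%}")
```

Note that this score ignores punctuation, speaker labels, sound descriptions, and timing, so a high number here still says nothing about those.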

What happens when captioning goes wrong

No surprise, there is a hashtag for this very topic, #CaptionFail, which collects hundreds if not thousands of instances of captioning gone very, very wrong.

Confusion

from https://www.3playmedia.com/learn/accessibility/quizzes/caption-fails-what-did-they-really-say/

Apologies for the bad screenshot. The captions at the bottom state, “Seen if you like ur an EPDM asking rent thank you don’t do that soon.”

Confusion is common in auto-captioning. Does anyone want to hazard a guess at what she really said? Visit the link in the image caption and take the quiz.

When something is this badly captioned, the auto-captioning engine is likely struggling with either the person’s voice (pitch, accent, speed) or vocabulary. Chances are the rest of the video captioning will be terrible as well since people’s pitch, accent, and speed rarely change.

The message is changed entirely.

This video is no longer available on YouTube. This screenshot is from https://www.sfcollege.edu/accessibility/electronic-accessibility/canvas/captions

YouTube’s notoriously bad “auto-captioning” attempted to caption Mitt Romney’s “American Strength” video but mistakenly transcribed the phrase “of strength” as “airstrikes.” One word captioned inaccurately can dramatically alter the message.

What turns captions into auto-craptions?

Speech speed. Humans tend to run our words together. French and German speakers are the worst at this. If people struggle to tell where the first word stops and the next word starts (and this is particularly bad when a word ends in S and the next word starts with S), the captioning engine may not figure it out either.

Accents other than Midwestern North American. I had a doctor who was not born in the US who sent me a video she wanted me to watch about cholesterol. The video was auto-captioned, and cholesterol came out continuously as “cluster all.” I knew what the video meant, but it made me question the doctor for using auto-captioning where a mistake could be grave.

Yesterday, I was on a call where an Australian director at my employer said “invisible disability” and it came up as “miserable disability.”

His name is Alister, and it came up as Allosaurus.

This happens, and it happens frequently.

Acronyms. WCAG, WGBH, TKS? Most captioning engines pick the closest word or set of words (I get “woo keg” a lot when I say WCAG as a word and not as individual letters, for example) because they don’t recognize the difference between acronyms and words.

Numeronyms — A11y (accessibility), K8S (Kubernetes), I18N (internationalization). These come up in my vocabulary a lot. In auto-captioning, they come up exactly as you would expect, but not what you want.

an eleven y

k (or sometimes kay) eight ess

I (or sometimes eye) eighteen n

Technical terms — Hyperconverged Kubernetes-based Infrastructure, anyone? If it isn’t in the dictionary, an auto-captioning engine will make its best approximation.

Non-English terms. Et voilà, res ipsa loquitur, adios. English speakers use many non-English terms in our day-to-day language, and auto-captioning engines are not good at making the distinction.

Names — Don’t ask me the number of ways I’ve seen my name butchered. It’s a lot.
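Some of the failures above repeat predictably, which means a cleanup pass can catch them after the fact. Here is a minimal sketch that swaps known mis-transcriptions from this article for the intended terms. The correction table and the function name are hypothetical, and this is plain find-and-replace, not a feature of any specific captioning engine.

```python
import re

# Known bad output mapped to the intended term (examples from this article)
CORRECTIONS = {
    "woo keg": "WCAG",
    "an eleven y": "a11y",
    "k eight ess": "K8s",
    "kay eight ess": "K8s",
    "i eighteen n": "i18n",
    "eye eighteen n": "i18n",
}


def build_pattern(corrections: dict) -> re.Pattern:
    """Compile one case-insensitive pattern matching any known bad phrase."""
    # Try longer phrases first so overlapping entries resolve predictably
    phrases = sorted(corrections, key=len, reverse=True)
    alternation = "|".join(re.escape(phrase) for phrase in phrases)
    return re.compile(rf"\b(?:{alternation})\b", re.IGNORECASE)


PATTERN = build_pattern(CORRECTIONS)


def fix_known_miscaptions(text: str) -> str:
    """Replace each known mis-transcription with its intended term."""
    return PATTERN.sub(lambda m: CORRECTIONS[m.group(0).lower()], text)


print(fix_known_miscaptions(
    "We test for an eleven y and woo keg compliance on kay eight ess."
))
```

A table like this only fixes mistakes you have already seen, and a blind replacement can be wrong when the phrase really was spoken, so a human still needs to review the result.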

We don’t have any (customers/employees/students) with hearing loss

I hear this a lot.

You don’t know that.

Even if you think you know that, you probably don’t. People don’t always disclose, even if you ask them to.

Hearing loss is the most common congenital disability. One in every 100 children is born with hearing loss, and 3 in every 1000 are profoundly deaf.

Even more people (WAY more) acquire hearing loss over time from one or more of aging, ototoxic drugs, viral infections, arthritis, and noise exposure. By age 65, you have a 1 in 3 chance of having a significant level of hearing loss.

LOTS of people use captions for reasons other than hearing loss. Those reasons can include:

Noisy backgrounds

Forgot headphones

Multi-tasking

Visual learners

English language learners

Captions are not a lot of work these days. They are also the ultimate “checkbook engineering” problem. “Checkbook engineering” was my father’s term for “you write a small check, and the problem goes away.”

Invest a small amount of time or money, and you can include people who need or want captions.

Ignore the need for captions and send an implicit “you don’t matter” message to people who need captions.

Do the right thing and caption all the time. Do custom captions when you can.

If being inclusive doesn’t resonate with you as a reason to do this, do it because you can eliminate 40% of your potential lawsuit plaintiffs with a single fix.

If avoiding lawsuits doesn’t resonate with you either, then before you finalize your “we aren’t going to caption” decision, make sure you read the history of the MIT and Harvard captioning lawsuits (TL;DR: MIT and Harvard fought captioning for 4 years, spent $4 million in legal fees, and still ended up agreeing to caption everything in the end).